09 May 2025

Incident Overview

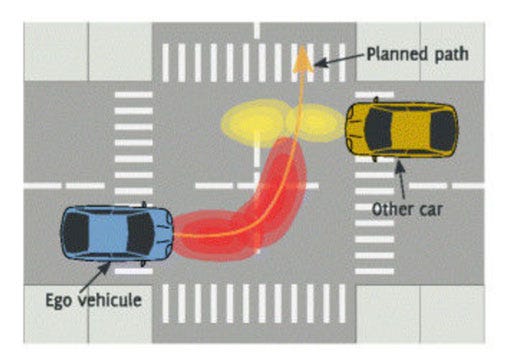

On the night of March 18, 2018, in Tempe, Arizona, Elaine Herzberg was crossing a four-lane road with her bicycle, outside a designated crosswalk, near the Red Mountain Freeway. An Uber test vehicle, operating in autonomous mode, collided with her. The modified Volvo XC90, monitored by a human safety driver, was traveling at approximately 70 km/h (43 mph). Herzberg was rushed to a hospital but succumbed to her injuries, marking the first recorded pedestrian fatality caused by an autonomous vehicle. Five to six seconds prior to the collision, the vehicle’s system detected an object but, unable to classify it, dismissed its initial evaluation. At 2.6 seconds, it identified a bicycle, only to reclassify it as another object 1.1 seconds later. The system attempted to navigate around the object but deemed it impossible. An audible warning to decelerate sounded 0.2 seconds before impact. At 0.02 seconds, the safety driver, Vasquez, seized the steering wheel, engaging manual mode, but the action came too late. Debate ensued over the site’s speed limit, with Tempe police citing 35 mph, while some reports suggested 45 mph.

Immediate Consequences and Investigation

In the wake of the tragedy, Uber suspended all autonomous road testing. The U.S. National Transportation Safety Board (NTSB) launched a comprehensive investigation. NTSB’s preliminary findings revealed that six seconds before impact (about 115 meters away), the vehicle, traveling at 43 mph, detected Herzberg. Yet, over the subsequent 4.7 seconds, the autonomous system failed to initiate emergency braking. Typically, a vehicle at 43 mph can stop within 27 meters after braking. However, by the time the system recognized the need for emergency braking, only about 25 meters remained, less than the stopping distance, so even immediate braking could not have brought the vehicle to a full stop. Legally, human oversight remained mandatory. The safety driver, Vasquez, later pleaded guilty to endangerment, having initially faced negligent homicide charges. The NTSB ultimately attributed the primary cause to Vasquez’s inattention, as she was distracted by work-related messages on her phone.
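The distances quoted above follow from simple kinematics. The sketch below reproduces them from the figures in the text (43 mph, 6 seconds, 4.7 seconds, 27 meters). It assumes constant speed before braking and constant deceleration while braking; the function names are illustrative, not taken from the NTSB report.

```python
MPH_TO_MS = 0.44704  # exact conversion factor: 1 mph = 0.44704 m/s


def distance_covered(speed_mph: float, seconds: float) -> float:
    """Distance in meters travelled at constant speed."""
    return speed_mph * MPH_TO_MS * seconds


def implied_deceleration(speed_mph: float, stopping_distance_m: float) -> float:
    """Constant deceleration (m/s^2) needed to stop within the given distance."""
    v = speed_mph * MPH_TO_MS
    return v ** 2 / (2.0 * stopping_distance_m)


speed_mph = 43.0
stopping_distance_m = 27.0  # figure quoted in the text

# Distance to the pedestrian at first detection, 6 s before impact.
detection_distance_m = distance_covered(speed_mph, 6.0)

# Distance left once 4.7 s had passed without braking (1.3 s before impact).
remaining_distance_m = distance_covered(speed_mph, 6.0 - 4.7)

# Assumed constant deceleration behind the 27 m stopping distance.
decel_ms2 = implied_deceleration(speed_mph, stopping_distance_m)

print(f"detection distance: {detection_distance_m:.1f} m")
print(f"distance remaining: {remaining_distance_m:.1f} m")
print(f"shortfall vs. stopping distance: {stopping_distance_m - remaining_distance_m:.1f} m")
print(f"implied deceleration: {decel_ms2:.2f} m/s^2")
```

Under these assumptions the remaining distance falls about two meters short of the stopping distance, which is why the late braking decision could not have produced a full stop.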

Industry-Wide Repercussions

The Herzberg tragedy reverberated through the automotive and technology sectors, compelling companies to temper the aggressive rollout of autonomous ride-hailing services. Uber withdrew its autonomous fleet from Arizona, and then-Governor Doug Ducey barred further testing by the company in the state. A legal scholar highlighted the perplexing absence of corporate accountability, noting that despite evidence of technical shortcomings and Uber’s deficient safety culture, criminal liability rested solely with the employee. Over 18 months, Uber’s autonomous test vehicles were involved in 37 collisions, intensifying scrutiny of its testing protocols. In Herzberg’s case, the system identified the bicycle a mere 1.2 seconds before impact, insufficient for evasive action. The incident stemmed from a confluence of factors: inherent system limitations, disabled safety features like automatic emergency braking, human error, and deficiencies in infrastructure and regulation. The NTSB’s detailed report elucidated these contributors. The resulting public and industry backlash profoundly shaped the trajectory of autonomous vehicle development and public perception, underscoring safety’s paramount role in fostering trust and driving technological adoption. The tragedy laid bare the limitations of early autonomous systems in navigating “edge” cases, exemplified by the system’s struggle to classify Herzberg and her bicycle, setting the stage for ongoing discourse on edge case challenges.

Evaluating Industry Claims

A Chinese automaker, drawing on 2023 laboratory data, asserted that its intelligent driving systems achieved an accident rate per million kilometers approximately one-tenth that of manual driving. Similarly, the world’s highest-valued automaker reports “miles driven between collisions” in its quarterly disclosures. Its 2024 third-quarter safety report indicated that vehicles equipped with Autopilot averaged nearly 7 million miles per collision, purportedly over ten times safer than human drivers.

Waymo’s Incident Data

Waymo, a leader in real-world autonomous mileage, documented 696 incidents involving its vehicles from 2021 to 2024, though not all were attributable to Waymo. Incidents spiked in 2024, predominantly in California and Arizona, typically in 25 mph zones under clear conditions, with vehicles operating in full autonomous mode without human operators. In 2025, one fatality, one serious injury, three moderate injuries, and 11 minor injuries were reported (excluded from the 2021–2024 dataset but noted for context).

NHTSA’s Insights

The U.S. National Highway Traffic Safety Administration (NHTSA) recorded 1,164 autonomous vehicle incidents since 2021, with no human fatalities (save one animal death). Of these, 47 resulted in moderate or severe injuries. Autonomous vehicles have logged roughly 70 million miles on U.S. public roads, a fraction of the over 3 trillion miles driven annually by humans. An autonomous taxi operator reported only two police-reportable collisions in its first million miles, outperforming human drivers. A collaborative report with a major reinsurer showed its autonomous taxis generated 0.78 property damage claims per million miles, compared to 3.26 for human drivers. An autonomous trucking firm reported three incidents over a million highway miles, all caused by other drivers, with no fatalities, against 1.6 fatal accidents per 100 million miles for human truck drivers. NHTSA data from July 2021 to May 2024 logged 617 autonomous vehicle collisions, with 79.1% involving other vehicles, 92.9% on dry roads, and 59.5% in daylight.

IIHS Perspectives

A RAND study estimated that autonomous vehicles require hundreds of millions of miles to robustly evaluate safety. Research by the Insurance Institute for Highway Safety (IIHS) found scant evidence that partial automation prevents collisions, framing it as a convenience rather than a safety feature. While systems like automatic emergency braking (AEB) demonstrated tangible safety benefits, partial automation technologies, such as adaptive cruise control (ACC) and lane-keeping assist, showed inconsistent safety gains. One study reported an accident rate of 9.1 per million miles for autonomous vehicles, compared to 4.1 for human-driven vehicles, though autonomous incidents were generally less severe, often resulting in minor injuries.

The “tenfold safety” narrative, while grounded in specific datasets, demands cautious interpretation given the limited mileage of autonomous vehicles relative to human-driven counterparts. Although several of the sources above report lower accident rates per million miles for autonomous systems, others report higher ones, and the cumulative autonomous mileage pales in comparison to human-driven miles, suggesting that existing data may not suffice for sweeping, long-term safety claims. Divergent metrics (accidents, collisions, incidents, or property damage claims) across organizations complicate direct comparisons. Preliminary data indicates strong performance in controlled or routine scenarios, such as clear-weather highway driving, but complex or rare situations require further scrutiny, as evidenced by the Herzberg tragedy and edge case debates. Waymo’s data reveals elevated incident rates in urban settings (e.g., San Francisco, Phoenix) and low-speed zones, contrasting with autonomous trucking’s favorable highway outcomes, highlighting the influence of operational design domains (ODD) on safety. IIHS findings challenge the safety benefits of current driver assistance systems, the stepping stones to full autonomy, suggesting that even advanced features may not yet significantly reduce accident rates.
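The mileage caveat can be made concrete with a standard zero-event bound: if a fleet drives n miles without a single event, it has shown with confidence C that its true event rate is at most R per mile once n ≥ −ln(1 − C) / R. The sketch below applies that bound to two human benchmark rates quoted in this article. It assumes events are rare and independent, and it is not presented as the method used by any of the cited studies.

```python
import math


def miles_for_confidence(rate_per_mile: float, confidence: float = 0.95) -> float:
    """Event-free miles needed to show the true rate is at most rate_per_mile.

    Assumes independent, rare events (Poisson approximation).
    """
    if rate_per_mile <= 0 or not 0.0 < confidence < 1.0:
        raise ValueError("rate must be positive and confidence must lie in (0, 1)")
    return -math.log(1.0 - confidence) / rate_per_mile


# Benchmarks taken from the text above.
human_crash_rate = 4.1 / 1_000_000          # accidents per mile
truck_fatal_rate = 1.6 / 100_000_000        # fatal accidents per mile

crash_miles = miles_for_confidence(human_crash_rate)
fatal_miles = miles_for_confidence(truck_fatal_rate)

print(f"miles to match the crash benchmark:    {crash_miles:,.0f}")
print(f"miles to match the fatality benchmark: {fatal_miles:,.0f}")
```

Matching a crash-rate benchmark takes well under a million event-free miles, while matching a fatality benchmark takes on the order of a couple of hundred million, which is why fatality comparisons are the hardest to support with current fleet mileage.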

Table 1: Autonomous vs. Human Driving Accident Rates

| Data Source | Metric Used | Autonomous Rate | Human Rate (when comparable) | Data Year | Conditions/Context |
|---|---|---|---|---|---|
| Chinese Automaker | Accidents per Million Kilometers | ~1/10 of manual driving | 10x autonomous rate | 2023 | Laboratory data |
| Top-Valued Global Automaker | Miles Driven Between Collisions | ~7M miles/collision | ~1/10 of autonomous figure | 2024 Q3 | Vehicles using Autopilot |
| Waymo | Incident Count | 696 incidents, 2021–2024 | N/A | 2021–2024 | Most incidents not Waymo’s fault |
| Waymo | Accidents per Million Miles | ~16.4 (696 incidents/42.3M miles) | 4.1 | 2021–2024 | Estimated mileage |
| NHTSA | AV-Involved Incidents | 1,164 since 2021 | N/A | 2021–present | No fatalities (except one animal) |
| Autonomous Taxi Company | Police-Reported Collisions per Million Miles | 2 incidents | Higher than autonomous | Initial 1M miles | |
| Autonomous Taxi Company | Property Damage Claims per Million Miles | 0.78 claims | 3.26 claims | N/A | |
| Autonomous Trucking Company | Incidents per Million Miles | 3 incidents | 108 incidents (truck drivers) | N/A | All incidents caused by others |
| Research Study | Accidents per Million Miles | 9.1 accidents | 4.1 accidents | 2019 | Cited study |

Note: Waymo’s mileage is an estimate, extrapolated from 6.1 million miles driven in California in 2020 and assumed growth within NHTSA’s 70 million total AV miles. This table juxtaposes data and metrics from varied sources and is not a direct like-for-like comparison.
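Because the sources report in different units, a first step toward any comparison is converting them to a common one. The sketch below normalizes three reporting styles from the table to events per million miles. The helper names are illustrative, and the Waymo mileage is the estimate described in the note, not a reported figure.

```python
KM_PER_MILE = 1.609344  # exact by definition of the international mile


def per_million_miles(events: float, miles: float) -> float:
    """Convert an event count and total mileage to events per million miles."""
    return events / miles * 1_000_000


def from_miles_between_events(miles_between: float) -> float:
    """Convert 'miles driven between collisions' to collisions per million miles."""
    return 1_000_000 / miles_between


def per_million_km_to_per_million_miles(rate_per_million_km: float) -> float:
    """A million miles is about 1.609 million km, so the rate scales up accordingly."""
    return rate_per_million_km * KM_PER_MILE


waymo_rate = per_million_miles(696, 42.3e6)        # estimated mileage
autopilot_rate = from_miles_between_events(7e6)    # ~7M miles per collision

print(f"Waymo (estimated):  {waymo_rate:.1f} incidents per million miles")
print(f"Autopilot (claimed): {autopilot_rate:.3f} collisions per million miles")
```

A common unit does not make the figures comparable on its own, since an "incident", a "collision", and a "claim" are still defined differently by each source.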

Dominance of Human Error in Accidents

A traffic safety white paper from the China Automotive Technology and Research Center noted that from 2017 to 2020, China averaged 2.35 million road accidents annually, resulting in 62,900 deaths and 243,800 non-fatal injuries, with over 90% attributed to driver error. The U.S. NHTSA estimates that approximately 94% of vehicle collisions stem from human error, with other estimates ranging from 93% to 98%. Human error encompasses a spectrum of behaviors and decisions deviating from safe driving practices.

Common Categories of Human Error

Distracted Driving: Activities like texting, calling, eating, or adjusting in-car controls divert attention.

Speeding: Exceeding speed limits or driving too fast for conditions limits reaction time and increases collision severity.

Impaired Driving: Alcohol or drugs impair judgment, coordination, and reaction time, elevating accident risk.

Ignoring Traffic Signals: Running red lights or disregarding stop signs endangers all road users.

Poor Decision-Making: Failing to yield, improper lane changes, or misjudging other drivers’ speed or distance.

Fatigue: Often underestimated, fatigue impairs rapid response and sound decision-making.

Inexperience: Novice drivers face higher risks due to limited skills and unfamiliarity with roads or traffic patterns.

These errors often involve subjective misjudgments, such as failing to perceive risks, or limitations in drivers’ ability to handle internal or external distractions.

How Autonomous Systems Aim to Eliminate Human Error

Autonomous systems are programmed to strictly adhere to traffic regulations, eliminating intentional violations like speeding or running red lights. They operate on defined rules and algorithms, free from subjective errors. Unaffected by emotions, fatigue, or substances, they deliver consistent, predictable driving behavior. Compared to humans, autonomous systems may possess superior environmental awareness and faster computational and decision-making capabilities.

Data across regions consistently show that human error drives the vast majority of traffic accidents, providing a compelling rationale for autonomous technology’s potential to significantly enhance road safety. Understanding prevalent human errors—distraction, fatigue, misjudgment—clarifies how autonomous systems can improve safety by mitigating these factors. However, transitioning from human to autonomous driving does not eliminate errors but shifts their source. As the Herzberg incident demonstrated, autonomous systems introduce new errors tied to software, hardware, and sensor limitations. The challenge lies in ensuring these new errors are less frequent and severe than pervasive human errors.

Sensor Suite

Cameras: High-resolution digital cameras provide visual data, identifying objects, detecting lanes, and interpreting traffic signals. Variants like near-infrared (NIR), visible light (VIS), thermal imaging, and time-of-flight cameras offer distinct advantages but struggle in adverse weather (rain, snow, fog) or low-light conditions.

LiDAR: Emitting laser pulses, LiDAR creates detailed 3D point clouds, delivering precise depth and spatial awareness. It offers high accuracy, 360-degree coverage (with rotating units), and effectiveness in darkness but is hampered by smoke, dust, rain, and higher costs compared to cameras.

Radar: Using radio waves, radar detects object positions and motion, providing reliable speed and distance calculations. It excels in all-weather conditions and long-range detection, ideal for highways, but offers lower precision in object modeling than LiDAR or cameras.

Ultrasonic Sensors: Employing high-frequency sound waves, these detect nearby objects for short-range tasks like parking or low-speed driving. Their range and field of view are limited, and performance is affected by temperature, dirt, or heavy precipitation.

GPS/GNSS and IMUs: Used for geolocation and motion tracking, respectively.

Sensor Fusion

Sensor fusion integrates data from multiple sensors to achieve a more comprehensive, accurate, and reliable environmental understanding than any single sensor. It operates at various levels (raw data, object, decision) and offers:

Compensation for individual sensor limitations (e.g., radar offsetting camera issues in poor weather, LiDAR enhancing radar’s ranging with 3D detail).

Improved object detection accuracy, reducing false positives/negatives.

Robust, fault-tolerant systems.

3D environmental modeling.

For instance, combining radar and cameras enables object classification with speed and distance measurements, while radar and LiDAR confirm motion and object shapes.
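One simple form of object-level fusion is inverse-variance weighting, where each sensor's estimate of the same quantity is weighted by how precise that sensor is. The sketch below fuses three range estimates this way. The noise variances are made-up illustrative values, and production systems typically use richer methods such as Kalman filtering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measurement:
    value: float     # estimated range to the object, in meters
    variance: float  # assumed sensor noise variance, in m^2


def fuse(measurements: list[Measurement]) -> Measurement:
    """Combine independent estimates of one quantity by inverse-variance weighting."""
    if not measurements:
        raise ValueError("at least one measurement is required")
    weights = [1.0 / m.variance for m in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * m.value for w, m in zip(weights, measurements)) / total_weight
    # The fused variance is never larger than the best individual variance.
    return Measurement(fused_value, 1.0 / total_weight)


# Hypothetical readings of the same object from three sensors.
radar = Measurement(value=50.4, variance=0.25)
lidar = Measurement(value=50.0, variance=0.01)
camera = Measurement(value=52.0, variance=4.0)

fused = fuse([radar, lidar, camera])
print(f"fused range: {fused.value:.2f} m, variance: {fused.variance:.4f} m^2")
```

The fused estimate sits close to the most precise sensor while still drawing on the others, and dropping a failed sensor from the list degrades precision rather than removing the estimate entirely.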

Decision Architectures

Modular Architecture: A traditional approach dividing tasks into perception, prediction, planning, and control, with data flowing sequentially. It offers strong interpretability, traceability, and ease of integrating safety rules but risks cumulative errors and struggles with complex, dynamic scenarios.

End-to-End Architecture: A newer method using a single neural network to map sensor inputs directly to driving commands, bypassing explicit modules. It simplifies structure, avoids cumulative errors, and leverages complex patterns but lacks interpretability, verifiability, and robustness in rare scenarios.

Hybrid Architecture: Combining modular and end-to-end strengths, exemplified by Huawei’s ADS 3.0. This end-to-end system learns from real-world scenarios to emulate and surpass human driving. Its GOD (General Obstacle Detection) network ensures precise environmental understanding, while the PDP (Prediction and Decision Planning) network seamlessly bridges perception and decision-making. Huawei’s cloud-based “World Engine” generates challenging virtual scenarios for training, complemented by a vehicle-side “World Behavior Model,” a foundational intelligent driving model. An “Instinctive Safety Network” adds redundancy for extreme cases. Huawei’s ADS 3.0 integrates proprietary hardware and software, equipped with extensive sensors, including LiDAR, radar, and cameras.

The redundancy and complementarity of sensor suites are critical for robust perception in diverse, challenging real-world conditions. Combining sensor modalities offsets individual weaknesses. The shift from modular to end-to-end decision architectures reflects a move toward data-driven approaches, but it introduces challenges in ensuring safety and reliability, particularly in interpretability and handling unexpected events. Hybrid approaches like Huawei’s ADS 3.0 strive to balance these paradigms. Continuous improvement hinges on access to vast, high-quality real-world data for training and validation, as underscored by Huawei’s cloud-based training and rapid iteration capabilities.
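The hybrid idea, a learned planner wrapped by an interpretable safety rule, can be sketched in a few lines. Everything below is hypothetical: the planner is a stand-in for a neural network, the time-to-collision threshold is an arbitrary illustrative value, and none of it reflects how Huawei's ADS 3.0 or any other production system is implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Obstacle:
    distance_m: float
    closing_speed_ms: float  # positive when the gap is shrinking


@dataclass(frozen=True)
class Command:
    throttle: float  # 0.0 to 1.0
    brake: float     # 0.0 to 1.0


def learned_planner(obstacles: list[Obstacle]) -> Command:
    """Stand-in for an end-to-end network; here it always proposes gentle cruising."""
    return Command(throttle=0.3, brake=0.0)


def safety_override(obstacles: list[Obstacle], proposed: Command,
                    min_ttc_s: float = 2.0) -> Command:
    """Rule-based check: brake fully if any time-to-collision is below the threshold."""
    for obstacle in obstacles:
        if obstacle.closing_speed_ms > 0:
            time_to_collision = obstacle.distance_m / obstacle.closing_speed_ms
            if time_to_collision < min_ttc_s:
                return Command(throttle=0.0, brake=1.0)
    return proposed


def drive_step(obstacles: list[Obstacle]) -> Command:
    """One control cycle: the learned proposal is always filtered by the rule."""
    return safety_override(obstacles, learned_planner(obstacles))


print(drive_step([Obstacle(distance_m=100.0, closing_speed_ms=5.0)]))
print(drive_step([Obstacle(distance_m=25.0, closing_speed_ms=19.2)]))
```

The rule is easy to audit and test in isolation, which is the interpretability benefit of the modular side, while the planner remains free to be entirely data-driven.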


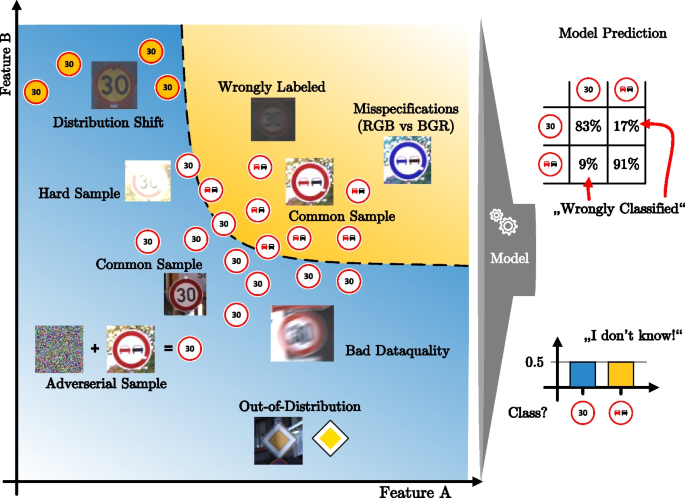

Defining Edge and Corner Cases

Edge cases are rare scenarios at extreme operational parameters (maximum or minimum). Corner cases occur when multiple parameters reach extreme levels simultaneously, placing the system at the “corners” of its operational space. Though infrequent, these scenarios can expose system limitations and potential failures.
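The distinction can be expressed as a count of how many operating parameters sit at an extreme of their range. The sketch below applies that definition; the parameter names, ranges, and the 5% tolerance band are illustrative assumptions rather than values from any standard.

```python
def classify_scenario(params: dict[str, float],
                      limits: dict[str, tuple[float, float]],
                      tolerance: float = 0.05) -> tuple[str, list[str]]:
    """Label a scenario as nominal, edge (one extreme), or corner (two or more)."""
    at_extreme = []
    for name, value in params.items():
        low, high = limits[name]
        margin = tolerance * (high - low)  # width of the band counted as "extreme"
        if value <= low + margin or value >= high - margin:
            at_extreme.append(name)
    if len(at_extreme) >= 2:
        kind = "corner"
    elif len(at_extreme) == 1:
        kind = "edge"
    else:
        kind = "nominal"
    return kind, at_extreme


# Hypothetical operating ranges for three parameters.
limits = {
    "speed_kmh": (0.0, 130.0),
    "visibility_m": (20.0, 2000.0),
    "road_friction": (0.1, 1.0),
}

icy_road = {"speed_kmh": 70.0, "visibility_m": 1000.0, "road_friction": 0.12}
icy_fog = {"speed_kmh": 70.0, "visibility_m": 25.0, "road_friction": 0.12}

print(classify_scenario(icy_road, limits))
print(classify_scenario(icy_fog, limits))
```

With these assumed ranges, the icy road alone is an edge case, while ice combined with dense fog is a corner case.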

Examples in Autonomous Driving

Unusual road obstacles (construction equipment, fallen trees, animals).

Unpredictable pedestrian behavior (sudden road crossing, children playing near roads).

Challenging weather (heavy rain, fog, snow obscuring lane markings).

Complex road configurations (faded lane markings, unconventional intersections).

Novel pedestrian appearances (e.g., Halloween costumes).

Extreme weather events (tornadoes, blizzards).

Combinations like icy roads, low-angle sunlight, heavy traffic, and pedestrians.

Why These Pose Challenges for AI

Standard training data may not encompass these rare, unpredictable scenarios. AI models trained on common scenarios struggle to generalize to unusual cases. Replicating, testing, and optimizing for these extreme configurations is costly and complex. End-to-end models, while powerful, may exhibit uninterpretable behavior or “AI hallucinations” in such cases, producing unexpected outputs.

Strategies for Identification and Mitigation

Brainstorming potential edge and corner cases during design.

Using data visualization and clustering to identify rare scenarios in large datasets.

Leveraging simulation environments to create and test diverse edge and corner cases, which are difficult or dangerous to encounter in real-world testing.

Implementing “corner case detection” to identify scenarios where system performance may degrade.

Generating thousands of extreme cases in the cloud to train vehicle-side AI, as in Huawei’s approach.

Continuously updating AI models based on real-world experiences and newly identified edge cases.

The ability to handle numerous rare but critical edge and corner cases is a linchpin of autonomous vehicles’ overall safety and readiness for widespread deployment. Failures in these scenarios can have severe consequences and erode public trust. Relying solely on real-world data may not cover the vast array of potential edge cases. Simulations play a vital role in generating diverse scenarios and enabling rigorous testing in safe, controlled settings. The data-driven nature of end-to-end systems versus the need for interpretability and safety assurances creates a notable tradeoff, particularly in edge and corner cases. Huawei’s “Instinctive Safety Network” exemplifies a rule-based fallback mechanism to address extreme scenarios.
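Of the strategies listed, corner case detection is the most direct to illustrate: flag any scenario whose features lie far from what the training data covered. The sketch below uses a per-feature z-score as a novelty measure. The feature names, training values, and threshold are invented for illustration, and real detectors generally work on learned representations rather than hand-picked features.

```python
from statistics import mean, stdev


def fit(samples: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Record the mean and standard deviation of each feature in the training set."""
    return {
        name: (mean(s[name] for s in samples), stdev(s[name] for s in samples))
        for name in samples[0]
    }


def novelty_score(scenario: dict[str, float],
                  stats: dict[str, tuple[float, float]]) -> float:
    """Largest absolute z-score across features; higher means less familiar."""
    scores = []
    for name, (mu, sigma) in stats.items():
        if sigma > 0:
            scores.append(abs(scenario[name] - mu) / sigma)
    return max(scores, default=0.0)


def is_corner_case_candidate(scenario: dict[str, float],
                             stats: dict[str, tuple[float, float]],
                             threshold: float = 3.0) -> bool:
    return novelty_score(scenario, stats) > threshold


# Hypothetical training data describing observed pedestrians.
training = [
    {"pedestrian_speed_ms": 1.2, "object_height_m": 1.70},
    {"pedestrian_speed_ms": 1.4, "object_height_m": 1.60},
    {"pedestrian_speed_ms": 1.3, "object_height_m": 1.80},
    {"pedestrian_speed_ms": 1.5, "object_height_m": 1.70},
    {"pedestrian_speed_ms": 1.1, "object_height_m": 1.65},
]
stats = fit(training)

typical = {"pedestrian_speed_ms": 1.25, "object_height_m": 1.70}
unusual = {"pedestrian_speed_ms": 1.30, "object_height_m": 1.00}

print(is_corner_case_candidate(typical, stats))
print(is_corner_case_candidate(unusual, stats))
```

A flagged scenario need not be dangerous; the flag only signals that the system is operating outside familiar territory and that a fallback or a more cautious policy may be appropriate.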

Defining Redundancy in Autonomous Vehicles

Redundancy involves incorporating backup components or systems to ensure continued operation if primary components fail. In autonomous vehicles, redundancy is essential for enhancing reliability and safety, as failures can lead to catastrophic outcomes.

Types of Redundancy

Sensor Redundancy: Multiple sensors (same or different types) cover the same operational range. For example, cameras, LiDAR, and radar enable environmental perception, with others compensating if one fails or underperforms.

Computational Redundancy: Duplicated processing units ensure that a secondary unit can assume safety-critical functions if one fails, such as dual independent computing platforms running autonomous software.

Control System Redundancy: Backup mechanisms for critical systems like steering, braking, and acceleration, including redundant braking (electromechanical and hydraulic) and steering systems with backup power.

Communication Redundancy: Multiple communication pathways ensure reliable data transmission, such as fallback methods if cloud connectivity is lost.

Power and Energy Storage Redundancy: Independent power sources for critical systems prevent total failure from a single power source loss.

Autonomous Driving System (ADS) Redundancy: Duplicating the vehicle’s “brain,” including computers and sensor arrays, to ensure continuous operation.
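Sensor and computational redundancy are often paired with a voting step that decides which of the duplicated outputs to trust. The sketch below shows a median-based voter over redundant range readings, with a failed channel represented as `None`. The deviation threshold and the two-channel minimum are illustrative assumptions.

```python
from statistics import median
from typing import Optional


def vote(readings: list[Optional[float]], max_deviation: float) -> float:
    """Return the mean of the readings that agree with the median.

    A reading of None marks a failed channel. At least two agreeing
    channels are required, otherwise the voter refuses to answer.
    """
    valid = [r for r in readings if r is not None]
    if len(valid) < 2:
        raise RuntimeError("insufficient redundancy: fewer than two live channels")
    mid = median(valid)
    agreeing = [r for r in valid if abs(r - mid) <= max_deviation]
    if len(agreeing) < 2:
        raise RuntimeError("live channels disagree beyond the allowed deviation")
    return sum(agreeing) / len(agreeing)


# One channel reports a wildly wrong range and is outvoted.
print(vote([50.1, 49.9, 80.0], max_deviation=1.0))

# One channel has failed outright; the remaining two still agree.
print(vote([50.1, None, 49.9], max_deviation=1.0))
```

Raising an error when too few channels agree is a deliberate choice here: in a vehicle, that condition would typically trigger a transition to a minimal-risk maneuver rather than continued normal driving.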

Benefits of Redundancy

Ensures safe operation despite component failures.

Enhances overall system reliability and fault tolerance.

Provides safeguards in unexpected or challenging scenarios.

Challenges of Implementation

Increased development and manufacturing costs.

Added weight and space requirements within vehicles.

Complexity in managing and coordinating redundant systems.

Redundancy is a cornerstone of autonomous vehicle safety, particularly as vehicles advance toward higher automation levels where human intervention is no longer expected or feasible. It serves as a critical safety layer, mitigating risks from system failures. However, implementing redundancy requires balancing cost, weight, and complexity against safety maximization. Advances in AI and sensor fusion can enhance redundancy’s effectiveness through intelligent fault detection and seamless transitions between primary and backup systems.

Technology Adoption Lifecycle

The technology adoption curve delineates how different groups embrace new technologies over time:

Innovators (2.5%): Risk-tolerant enthusiasts eager for new technologies.

Early Adopters (13.5%): Trendsetters willing to take risks to form independent opinions.

Early Majority (34%): Interested but evidence-driven, relying on reviews and case studies.

Late Majority (34%): Skeptical, adopting only when technology is mature and widely used.

Laggards (16%): Resistant to change, adopting only when compelled or when the technology has become ubiquitous.

The “Chasm” Theory

Geoffrey Moore’s “Crossing the Chasm” theory highlights a significant gap between early adopters (visionaries) and the early majority (pragmatists) for high-tech products. Their expectations and motivations differ markedly. Transitioning from early to mainstream markets requires shifting focus from technology to human needs.

Autonomous Driving and the Adoption Curve

Autonomous driving technology is likely at or nearing the “chasm,” capturing the interest of innovators and early adopters but facing skepticism from the early majority. This group and more conservative segments prioritize maturity, reliability, and proven safety records. Incidents like Herzberg’s death amplify concerns, making the chasm harder to cross. Many remain unexposed to autonomous driving and are reluctant to trust machines over human drivers.

Fostering Public Trust

Demonstrating robust safety and reliability is pivotal to building trust and encouraging broader adoption. Clear communication of autonomous systems’ capabilities and limitations is essential, as is addressing public concerns and ensuring transparency in development and testing.

The “chasm” framework illuminates the challenges autonomous driving faces in achieving widespread adoption. It must move beyond appealing to tech enthusiasts and visionaries to demonstrate tangible benefits—chiefly safety—to the pragmatic early majority. Public perception is heavily influenced by media coverage of accidents, however statistically rare. The sentiment that “a one-in-a-million chance becomes 100% when it happens to you” underscores the trust challenge for safety-critical technologies. Crossing the chasm demands not only technical advancements and verifiable safety improvements but also effective communication to alleviate concerns, build trust, and highlight practical benefits.

Ongoing R&D Efforts

Advancements in sensor technologies, such as solid-state LiDAR and AI-enhanced sensor fusion.

Continuous development of AI algorithms for perception, prediction, and planning.

Growing adoption of end-to-end and hybrid architectures.

Emphasis on large-scale data collection, cloud-based training, and rapid AI model iteration.

Development of advanced simulation environments and scenario databases for rigorous testing.

Role of Regulatory Frameworks

Evolving regulations and safety standards for autonomous vehicles are critical. Government oversight and independent testing ensure system safety. China’s efforts in establishing standards and promoting intelligent connected vehicles are noteworthy.

Importance of Rigorous Testing and Validation

Extensive testing across diverse real-world conditions and challenging scenarios is essential. Virtual testing and simulations complement real-world efforts, since hundreds of millions to billions of miles may be needed for statistical confidence in safety.

Continuous Learning and Improvement

Autonomous systems must evolve through real-world data, updating models to enhance performance and safety. Over-the-air updates are crucial for deploying improvements.

Addressing Ethical and Societal Considerations

Ongoing discussions about ethical dilemmas and societal impacts of autonomous vehicles are vital.

Achieving true autonomous driving safety is an iterative process, requiring relentless technological advancement, robust regulatory frameworks, rigorous testing, and a commitment to learning from real-world successes and failures. Collaboration among industry, government, and research institutions is essential to establish safety standards, share best practices, and accelerate the development of safe, reliable autonomous technologies. While preventing accidents caused by autonomous vehicles is a priority, safety also involves managing interactions with human drivers and addressing potential misuse of driver assistance systems, precursors to full autonomy.

Autonomous driving technology holds immense potential to enhance road safety by reducing human error. Significant strides have been made, with manufacturers touting ambitious safety claims. However, these assertions must be scrutinized, considering the limitations of current data due to restricted mileage and diverse operating conditions. Challenges persist in addressing edge and corner cases and ensuring system robustness across all real-world scenarios. Redundancy is a linchpin in building safety-critical autonomous vehicles. Public trust remains paramount, necessitating ongoing efforts to demonstrate safety and reliability to achieve widespread adoption. While autonomous driving offers transformative promise for road safety, realizing a fully safe and ubiquitous autonomous future is an evolving journey, demanding continuous innovation, rigorous testing, and careful consideration of technical and societal factors.